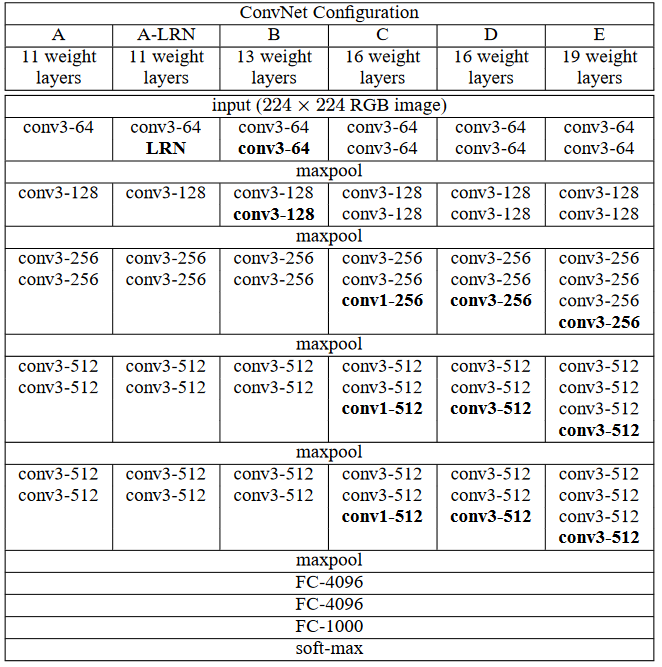

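    import torch.nn as nn


    class VGG16(nn.Module):
        """VGG16: 13 conv layers and 3 linear layers, for 3x224x224 inputs."""

        def __init__(self):
            super().__init__()
            # Stateless modules can safely be reused throughout forward().
            self.relu = nn.ReLU()
            self.pool = nn.MaxPool2d((2, 2), (2, 2))
            self.dropout = nn.Dropout(0.5)
            self.flatten = nn.Flatten()
            # Every conv layer needs its own weights, so each gets its own module.
            # All are 3x3, stride 1, padding 1, which preserves height and width.
            self.conv1_1 = nn.Conv2d(3, 64, (3, 3), (1, 1), (1, 1))
            self.conv1_2 = nn.Conv2d(64, 64, (3, 3), (1, 1), (1, 1))
            self.conv2_1 = nn.Conv2d(64, 128, (3, 3), (1, 1), (1, 1))
            self.conv2_2 = nn.Conv2d(128, 128, (3, 3), (1, 1), (1, 1))
            self.conv3_1 = nn.Conv2d(128, 256, (3, 3), (1, 1), (1, 1))
            self.conv3_2 = nn.Conv2d(256, 256, (3, 3), (1, 1), (1, 1))
            self.conv3_3 = nn.Conv2d(256, 256, (3, 3), (1, 1), (1, 1))
            self.conv4_1 = nn.Conv2d(256, 512, (3, 3), (1, 1), (1, 1))
            self.conv4_2 = nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1))
            self.conv4_3 = nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1))
            self.conv5_1 = nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1))
            self.conv5_2 = nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1))
            self.conv5_3 = nn.Conv2d(512, 512, (3, 3), (1, 1), (1, 1))
            # Five 2x2 pools reduce 224x224 to 7x7, hence 7*7*512 input features.
            self.linear1 = nn.Linear(7 * 7 * 512, 4096)
            self.linear2 = nn.Linear(4096, 4096)
            self.linear3 = nn.Linear(4096, 1000)

        def forward(self, x):
            # Block 1: 3 -> 64 channels, 224 -> 112 after pooling
            x = self.relu(self.conv1_1(x))
            x = self.relu(self.conv1_2(x))
            x = self.pool(x)
            # Block 2: 64 -> 128 channels, 112 -> 56
            x = self.relu(self.conv2_1(x))
            x = self.relu(self.conv2_2(x))
            x = self.pool(x)
            # Block 3: 128 -> 256 channels, 56 -> 28
            x = self.relu(self.conv3_1(x))
            x = self.relu(self.conv3_2(x))
            x = self.relu(self.conv3_3(x))
            x = self.pool(x)
            # Block 4: 256 -> 512 channels, 28 -> 14
            x = self.relu(self.conv4_1(x))
            x = self.relu(self.conv4_2(x))
            x = self.relu(self.conv4_3(x))
            x = self.pool(x)
            # Block 5: 512 -> 512 channels, 14 -> 7
            x = self.relu(self.conv5_1(x))
            x = self.relu(self.conv5_2(x))
            x = self.relu(self.conv5_3(x))
            x = self.pool(x)
            # Classifier head
            x = self.flatten(x)
            x = self.relu(self.linear1(x))
            x = self.dropout(x)
            x = self.relu(self.linear2(x))
            x = self.dropout(x)
            # Return raw logits; a ReLU here would clip negative class scores.
            return self.linear3(x)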
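The `7*7*512` input size of `linear1` follows from the layer layout, and it can be checked with plain arithmetic. The sketch below is a minimal illustration, not part of the original listing. It assumes a 3x224x224 input (inferred from the `7*7*512` figure) and the standard 13-conv VGG16 layout. The names `CFG`, `trace`, and `linear_params` are hypothetical helpers introduced only for this example. It traces the channel count and spatial size through the conv stack and counts the learnable parameters.

```python
# Layer plan, assuming the standard VGG16 layout: integers are conv output
# channels, "M" marks a 2x2 max pool with stride 2.
CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
       512, 512, 512, "M", 512, 512, 512, "M"]


def trace(cfg, in_channels=3, size=224):
    """Return (channels, spatial size, conv parameter count) after the conv stack."""
    params = 0
    channels = in_channels
    for item in cfg:
        if item == "M":
            size //= 2  # a 2x2 pool with stride 2 halves height and width
        else:
            # 3x3 conv with stride 1 and padding 1 keeps the spatial size;
            # each filter has 3*3*channels weights plus one bias.
            params += (3 * 3 * channels + 1) * item
            channels = item
    return channels, size, params


def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


channels, size, conv_params = trace(CFG)
flat = channels * size * size
fc_params = (linear_params(flat, 4096)
             + linear_params(4096, 4096)
             + linear_params(4096, 1000))
total_params = conv_params + fc_params

print(channels, size, flat)  # 512 7 25088
print(total_params)          # 138357544
```

Under these assumptions the flattened feature size is 512 * 7 * 7 = 25088, which matches `nn.Linear(7*7*512, 4096)`. An input of a different resolution would change this number and require a different `linear1`.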